In the light of the Wellcome Trust’s new responsible metrics mandate, Lizzie Gadd reports on the findings from the Lis-Bibliometrics 2018 Responsible Metrics State-of-the-Art survey. How many of us will welcome Wellcome’s proposal and who will be running to catch up?

This week has seen a big announcement from the Wellcome Trust that as part of their open access policy alignment with Plan S, they will only fund institutions that have publicly committed to content-based evaluation approaches such as the San Francisco Declaration on Research Assessment (DORA), the Leiden Manifesto or equivalent. This is pleasing on two fronts. One, it will focus the mind of HEIs that may have put engagement with responsible metrics on the back burner. And two, they didn’t single out DORA as the only route to responsible metrics, but allowed for equivalent approaches too. Bravo.

This all raises the question of how much of a commotion this is going to generate amongst institutions that have no responsible metrics policy, and how many are well on the road to meeting this requirement. Happily, we can go some way to answering that with the results of our fourth annual ‘state-of-the-art’ survey of the HE sector’s engagement with responsible metrics (RM).

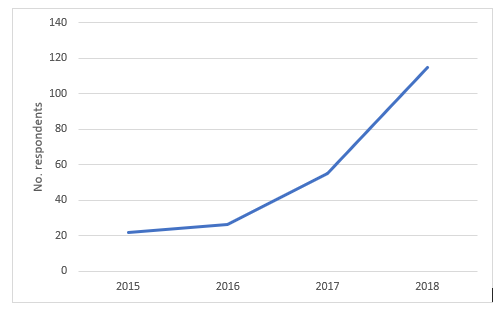

The first survey, back in 2015, had a pretty low number of responses (a mere 22 respondents) as did the second in 2016. The 2017 survey saw the number of responses double and this year we saw it double again with 115 respondents – and we didn’t have to try so hard to get them either. Admittedly, the lists we advertised on (Lis-Bibliometrics and ARMA Metrics SIG) have grown over the years, and others such as the US Research Assessment and Metrics Interest Group have sprung up too. The surveys are not extensive – we keep the number of questions small to generate the maximum number of responses – and we’re not claiming scientific rigour. However, with four years’ worth of data it does give us an interesting perspective on how this area has developed over time.

International response

For the first time this year we asked respondents which country they were based in. 111 told us and of these 33 (30%) were from outside the UK. Eighteen other countries were represented, with more than one response from Germany, Canada, Spain, the Netherlands, USA, New Zealand and Australia. Obviously we don’t have any historical data to compare this with, but it is certainly an encouraging sign that responsible metrics awareness spreads beyond the UK.

I’ve always made it clear when advertising the survey that we welcome responses both from those who have engaged with RM and from those that have yet to do so. Looking at the data, however, it was clear that the reason we had more respondents this year was that more people had RM activity to report. Out of the 115 respondents, 38 (almost exactly one-third) had either signed DORA or developed their own principles. Three of these were from non-UK institutions. A further 19 were likely to sign DORA or were currently developing their own principles (five non-UK). This meant that 50% of all respondents had either committed, or were in the process of fully committing, to a RM approach of some kind. In fact only 19 (17%) were not giving RM any thought at all in their institutions.
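As a rough check on the proportions quoted above, the shares can be recomputed from the raw counts. This is only a minimal sketch: the counts are the ones reported in this post, while the category labels and the `share` helper are illustrative names, not part of the survey itself.

```python
# Counts as reported in this post for the 2018 survey.
TOTAL_RESPONDENTS = 115

# Labels are illustrative shorthand, not the survey's own wording.
counts = {
    "committed (signed DORA or own principles)": 38,
    "in progress (likely to sign or developing)": 19,
    "not considering RM": 19,
}


def share(count, total=TOTAL_RESPONDENTS):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {label: share(n) for label, n in counts.items()}

# Combined share of those committed or in the process of committing.
committed_or_in_progress = share(38 + 19)

for label, pct in shares.items():
    print(f"{label}: {pct}%")
print(f"committed or in progress: {committed_or_in_progress}%")
```

The combined figure comes out just under one half, which is what the 50% headline rounds from.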

“50% of all respondents had either committed, or were in the process of fully committing, to a RM approach of some kind.”

The rise of bespoke approaches to responsible metrics

There are really three main routes to engaging with RM. One is to sign DORA; one is to develop your own bespoke set of principles for the responsible use of metrics, perhaps based on existing guidance such as the Leiden Manifesto; and the third is to ‘do’ metrics responsibly without feeling the need to commit yourself in writing. Obviously the latter is not an option now for any institution seeking Wellcome Trust funding. However, most proponents of RM would agree that having some kind of statement is useful both to help institutions to think deeply about the issues and subsequently in enabling academic staff to hold institutions to account.

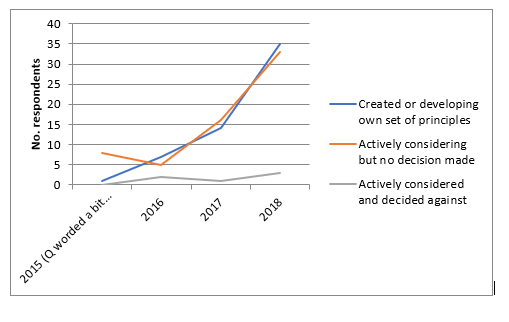

Figures 2 and 3 show the rise in engagement with DORA and bespoke RM principles. They show fairly equal levels of engagement with both. Thirty had signed and six were likely to sign DORA (36 in total) whilst 11 already had, and 24 were currently developing, their own RM principles (35). It was interesting to note that more had signed DORA than had already developed their own principles. This may be because it’s easier to sign an existing protocol than develop your own. However, the tide appears to be turning, with fewer actively considering DORA in 2018 (19) than actively considering their own principles (33). Similarly, more had rejected DORA by 2018 (11) than had rejected the idea of developing their own principles (3).

It may be inappropriate to compare DORA to bespoke approaches in this way as they are doing two slightly different things. DORA seeks to address a specific subset of problems with the use of journal metrics in research evaluation, whilst bespoke approaches often address a much broader range of responsible research evaluation issues. The data clearly show that many HEIs don’t see them as mutually exclusive, with fourteen of the 36 (39%) who had already signed or were likely to sign DORA having also developed, currently developing, or considering developing their own principles. Thus it appears that there is some overlap between the two communities but there are also sectors of the community who are choosing one over the other. Loughborough University is one of these and I’ve written about our decision before. The data suggest that the Wellcome Trust’s decision to allow multiple routes to responsible research evaluation was an important one.

How bespoke are bespoke approaches?

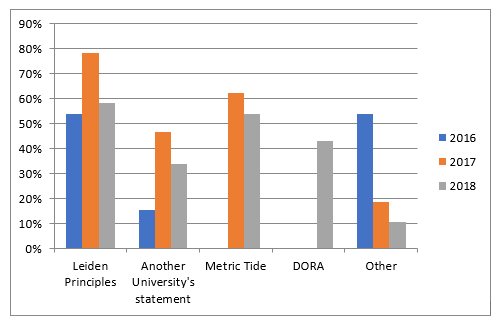

Having said that there seems to be a rise in the development of bespoke approaches to RM principles, the data do suggest that there is a heavy reliance on existing models to inspire a bespoke one. Indeed, of the 68 who have created or are actively considering/developing their own principles, only four (6%) said they were not basing their principles on anyone else’s. Figure 4 shows the range of sources HEIs were drawing on. The responses to this question aren’t really comparable year on year because the Metric Tide wasn’t listed as an option until 2017, and DORA wasn’t listed as an option until 2018; however, they did both appear as ‘Other’ responses in 2016 and 2017. The only conclusion we can confidently draw is that when HEIs say they are developing their ‘own’ principles they are not doing so in a vacuum. We can also see that the Leiden Principles are most consistently drawn upon to inspire HEIs’ own principles.

Who’s making the decisions?

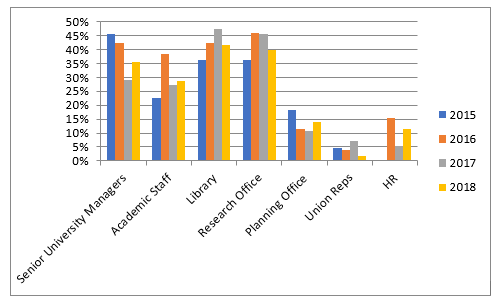

In terms of who is actually involved in developing a university’s RM policy, there don’t seem to have been any big changes over time. It is mainly Library and Research Office staff with a disappointing proportion of academic staff, a declining number of senior managers, and a smattering of planners, HR and Union reps. The introduction of responsible metrics mandates from research funders will no doubt focus the minds of Pro-Vice Chancellors, but whether they will personally get involved in policy-making or whether this will be delegated to others in the institution remains to be seen. As I’ve said before, I really believe that an institution is only ever as ‘responsible’ as its most senior decision maker in this space, and if they are not involved in RM policy-making it may not bode well for the institution’s adherence to that policy.

What difference does having a RM policy make?

One of the questions I immediately asked when I heard of the Wellcome Trust’s new policy was how they propose to monitor compliance. Obviously, signing up to a RM policy is the easy bit – and no doubt we’ll see institutions clamouring to do so – however, implementing that policy is something else. The Wellcome Trust policy states that they “may ask organisations to show that they’re complying with this as part of our organisation audits”. However, what exactly they’ll be looking for in any audit and how far their powers can reasonably reach is as yet unknown. Are institutions just required to assess research on its merits rather than the journal it’s in? Or do they need to commit to the full gamut of DORA or Leiden Manifesto principles in order to be compliant? If the former, will Wellcome be looking mainly at recruitment and promotion practices? Or will they seek to audit all internal performance monitoring processes? And is that appropriate?

To try to understand what effect RM policies were actually having on institutions, we asked respondents who had already developed their own principles or signed DORA (38) how this had affected their use of metrics in practice. Only 24 of the 38 (63%) responded to this question and most had nothing significant to report. Seven said it was too early to say, and five said it had had no effect. One did not know. Of the other eleven, some felt they were still “at the education stage” with reports of “Developing training and support materials for delivery in 2018/19”. Five felt that the main effect of their policy was to send a clear message to colleagues around responsible assessment practices. Another reported that “Our statement of principles was intended to articulate (existing) good practice”, therefore “Having the statement shows our commitment [to] research integrity, provides reassurance to researchers that their work will be fairly assessed and helps us resist blunt use of metrics.”

Only five stated that they had made changes to recruitment, promotion and metrics provision as a result of their policies. “Metrics provided by me will have responsible metrics approach [sic] and any data supplied will have RM caveats”, said one. Another wrote, “No requirement for JIF indicators on job applications or promotion documents”. This leads us to question which of these institutions would actually be deemed compliant with the Wellcome Trust policy. Assuming that just paying lip service to responsible research assessment is not enough, what is?

Who’s monitoring compliance?

Of course the threat of an external responsible metrics audit might lead more institutions to monitor internal compliance with their own policy. We asked if and how institutions were currently doing so. Twenty-three responded and again very few had an affirmative answer. Seven said they don’t monitor compliance, nine said it was too early (although one of these planned to have a named contact), and two were unsure. Of the remaining five, two stated that they already had a named contact for reporting non-compliance, and another had a “formal reporting mechanism”. The latter had run a survey of senior staff to understand their engagement with the policy and reported that as a consequence they had recognised the need to further promote their principles. One respondent took an interesting approach to monitoring compliance by “stimulating peer review of draft analyses in our internal Research Intelligence network”. This is an excellent idea: rather than using their policy to judge the use of metrics in their institution, they were using it as an opportunity to come alongside colleagues using metrics to co-design responsible analyses with them. Overall, though, it was surprising how few had built monitoring and review into their responsible metrics policies, when the point of those policies was, presumably, to change or uphold certain standards of behaviour.

Conclusions

In the light of what looks set to be a flurry of funder responsible metrics mandates on the back of Plan S, it will perhaps be pleasing to funders to see that there is a general trend towards engaging with this agenda – and international engagement at that. It is perhaps typical of the HE community that there is no one standard approach to RM. The Wellcome Trust’s inclusive approach of rewarding engagement with all responsible metrics approaches, wherever they uphold the principle of assessing research on its merits rather than its publication venue, is therefore both a sensible and a welcome one. (See what I did there.) We can only hope that other funders seeking to implement Plan S, which name-checks DORA as the “starting point” from which it seeks to “fundamentally revise the incentive and reward system of science”, will do the same.

What is less clear is what will constitute compliance and how this will be audited. And given the community’s current inability to articulate the impacts their RM approaches are having and how (if at all) they are monitoring their effect, this may be problematic. However, it is early days for most. DORA’s Steering Group Chair, Stephen Curry, takes the approach that institutions should “sign now, think later”, recognising that responsible metrics is a journey not a destination. I get this. But what funders won’t want is a new generation of tick-box policy signers who have no plans to change their institutional culture. Let’s hope that the risk of a Wellcome Trust audit will be enough to generate real engagement with responsible metrics, and that this time next year we will see an even more mature response across the sector.

Elizabeth Gadd is the Research Policy Manager (Publications) at Loughborough University. She is the chair of the Lis-Bibliometrics Forum and is the ARMA Research Evaluation Special Interest Group Champion. She also chairs the newly formed INORMS International Research Evaluation Working Group.

Unless it states otherwise, the content of The Bibliomagician is licensed under a Creative Commons Attribution 4.0 International License.
