Every year for the last three years I have ‘taken the temperature’ of the HE community regarding responsible metrics using a short survey. The surveys have been advertised to the Lis-Bibliometrics Forum and the ARMA Metrics Special Interest Group. Back in 2015 I was keen to understand whether and how universities were responding to the San Francisco Declaration on Research Assessment (DORA). In 2016, following the Metric Tide report’s call to develop statements on the responsible use of metrics, I wanted to know whether universities were considering this. This year, knowing that a number of us had developed such statements, I wanted to see how this was working out in practice. Each year I have asked similar questions as well as a question addressing a current issue. Don’t get me wrong, these have not been scientific survey instruments: I went for recall over precision! However, they have enabled me to plot over time the response of universities towards responsible metrics, leading to some interesting trend data. So how is it all going?

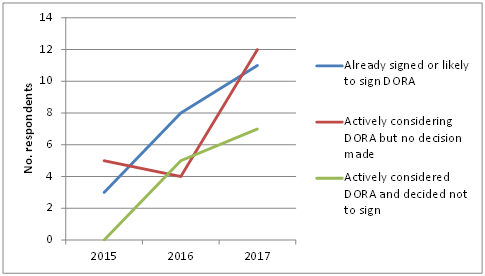

Well, the first thing to note is that the latest survey (open from 14 August 2017 and analysed on 7 September 2017) had more than twice as many respondents (55) as the surveys in 2015 (25) and 2016 (27). I think this in itself is indicative of the growth of engagement with responsible metrics. In terms of engagement with DORA, there was a considerable rise in the number of institutions that had either signed or were likely to sign (11) or were actively considering it (12). However, there was also a sharp rise in the number of institutions that had considered signing but decided against it (7). Loughborough is one of those and some of the reasons for our hesitation are outlined in an earlier blog post.

By contrast, far fewer respondents who had considered developing their own set of principles for the responsible use of metrics subsequently decided against it (only one in 2017). In fact a larger number of respondents had either created (or were in the process of creating) their own principles (14), or were actively considering it (16). (I should point out that the question I asked in 2015 was a simple Yes/No/Maybe to the idea that the institution might develop its own principles; in subsequent years the questions were more nuanced.)

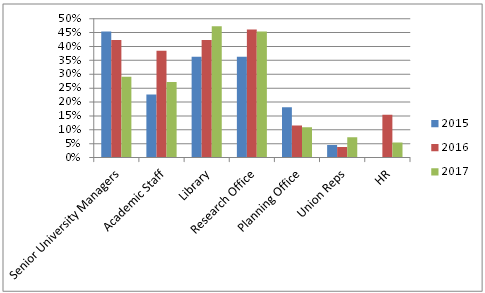

When asked who was involved with developing the statements of principle, we can see that proportionately fewer senior managers are getting involved, with more Library or Research Office staff playing a role. Academics are only involved in 22-37% of cases, which I personally think is somewhat concerning in terms of buy-in. Ultimately, they are the ones being measured, and are usually involved in doing the measuring. Indeed, see below for some potential consequences of this.

Finally, the 2016 and 2017 surveys asked those who were developing their own principles whether they planned to base them on any existing documentation. This year you can see a considerable rise in the number of institutions using the Leiden Principles as their foundation – almost 80% of respondents answering this question were drawing on Leiden’s work. Perhaps in response to the increasing number of universities with statements, a growing number of respondents were using these to inform their own. Just under 50% of the 2017 total claimed to be basing their statements on those of other universities, up from about 15% in 2016. The data relating to the Metric Tide report is somewhat misleading, in that this option was omitted from the 2016 survey (and accounts for the large proportion of ‘Other’ responses in 2016). In 2017, just under two-thirds of universities were basing their statements on the five Metric Tide principles (robustness, humility, transparency, diversity and reflexivity). Of the six selecting the ‘Other’ box in 2017, only one mentioned DORA (no-one mentioned DORA in 2016). Another said ‘all of the above’.

So we can see considerable growth in engagement with responsible metrics across the sector. This is a good thing. However, as Wilsdon said in response to Loughborough University’s statement in 2017, “signing up to manifestos is the easy part: the bigger challenge is to embed such approaches in institutional systems, and in the criteria used for hiring, promotion and evaluation”. He’s absolutely right of course. Now that an increasing number of institutions have either signed DORA or established their own statements, I thought it timely to ask respondents exactly what impact their efforts had had on the use of metrics at their institution.

Perhaps not surprisingly, responses were sparse – twelve in total. Two of these simply stated that it was too early to tell. One stated that it had led to promotion, support and training activity which is clearly the first step in implementing any new policy. Another said it had led to internal discussions about the effective measurement of science. One (presumably a DORA signatory) said it had reduced the use of the Journal Impact Factor at their institution. However, another DORA signatory said they “face challenges in reflecting this in operational reality, mostly because faculty do not necessarily treat the institution’s commitment to DORA as compelling them to follow its principles.”

I think this raises a critical point which probably applies to all policy: who owns it? I know some see signing DORA as a high-level commitment to a certain direction of travel. That’s not a bad thing, but it could end up toothless if staff don’t know it exists, don’t know how to comply and face no consequences if they don’t.

Two respondents said that their statement simply formalised existing good practice at their institution and thus no new impacts were felt. However, one of these did state that they take even more care now with their responsible approach because “now, ‘formally’, it matters.” One of the steps they now take is to routinely report confidence intervals when writing reports. The respondent wrote, “when the report user sees how wide the confidence intervals are sometimes, it does far more in practice than I could ever do to explain the limitations around bibliometrics!” They went on, “Yet, with that clearer understanding of the limitations of bibliometrics developing, we are, paradoxically, also increasingly able to undertake much more mature work with bibliometrics. Getting rid of the mystery around bibliometrics helps a lot, and questions, discussion, and debate around responsible metrics is an excellent way to open the black box and take a good look inside.”
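For anyone wondering what routinely reporting a confidence interval might look like, here is a minimal sketch in Python. It assumes a percentile bootstrap on the mean citations per paper. The respondent did not say which method they use, so this is only one possible approach, and both the function name `bootstrap_mean_ci` and the citation counts are made up for illustration.

```python
import random
import statistics


def bootstrap_mean_ci(values, n_resamples=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`.

    Illustrative helper only (an assumption, not the respondent's method).
    Returns (sample_mean, lower_bound, upper_bound).
    """
    rng = random.Random(seed)  # fixed seed so the report is reproducible
    n = len(values)
    # Resample the papers with replacement and record each resample's mean.
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    alpha = (1.0 - level) / 2.0
    lower = means[int(alpha * n_resamples)]
    upper = means[int((1.0 - alpha) * n_resamples) - 1]
    return statistics.fmean(values), lower, upper


# Hypothetical citation counts for a small, skewed set of papers.
citations = [0, 0, 1, 1, 2, 3, 3, 5, 8, 12, 40]
mean, lower, upper = bootstrap_mean_ci(citations)
print(f"Mean citations per paper: {mean:.1f} (95% CI {lower:.1f} to {upper:.1f})")
```

With a small set of papers and one highly cited outlier, the interval comes out wide, which is exactly the kind of limitation the respondent says report users notice for themselves.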

At Loughborough University, we’ve felt the weight of our responsible metrics statement most keenly when starting to use bibliometrics for recruitment. Whereas we had a lot of guidance (DORA, Leiden, Metric Tide) to support us in the writing of our principles, we had no guidance at all when it came to putting them into practice. What do I mean? Well, despite Loughborough’s fairly comprehensive statement we still faced a number of questions when it came to implementation. Things like: what level of training should be mandatory for those interpreting the numbers? Are long lists of caveats enough? Should metrics be the first or the last thing a recruiter sees? What do you do if one candidate has no metrics (due to career stage, discipline or having come from industry)? Do you provide metrics for the other candidates and explain their absence for that one? Or should you not look at metrics for any of them? Where is the onus to check if the data underlying your calculations is correct: on the candidate or the university? And should you universally trust a candidate-provided publication list or double check it with your own searches? One of the key elements of most responsible metrics principles is transparency – so how does this apply to recruitment exercises? Should you declare which metrics you will use upfront? Should you supply the candidate with the data and/or indicators you have generated? We had to take decisions on all these things. In an attempt to be squeaky clean, it took hours. (And sometimes tears.)

Statements of principle on the responsible use of metrics are a great place to signal your organisation’s commitment to doing metrics well. I heartily recommend them. But they are the beginning and not the end of the journey. I would really have benefitted from some best practice guidance around this – or at least a Community of Practice I could call on to ask some sensitive questions. Discussion lists like LIS-Bibliometrics are excellent, but they are probably not the forum for this. I wonder whether some Best Practice guidance and the formation of small, known, Communities of Practice is our next step as a community of bibliometric practitioners seeking to do metrics responsibly?

Elizabeth Gadd is the Research Policy Manager (Publications) at Loughborough University. She has a background in Libraries and Scholarly Communication research. She is the co-founder of the Lis-Bibliometrics Forum and is the ARMA Metrics Special Interest Group Champion.

This paints a pretty depressing picture of universities’ consciousness of the deeply corrupting effect of metrics (all of them) on science. One would have thought that the flood of evidence that has come to light about the crisis of reproducibility in some areas of science would have made senior scientists re-think their attitude to using metrics for recruiting and promoting people. Until the publish or perish culture dies, science will continue to suffer from a rash of false positive results. That isn’t a trivial problem. Every false positive provides ammunition for the Trump-like science deniers. And it is largely the fault of senior academics who impose incentives to short-cuts and dishonesty.

It must stop, before it’s too late.

Thanks for your comment, David. To be fair, I think most bibliometric practitioners are highly conscious of the effects of metricification on scholarship hence making best efforts to ensure that where they are used, they are used as responsibly as possible. However, as you rightly point out, the activities of universities are measured and ranked by many third parties and those of us working within simply cannot afford to ignore this. Consequently many of us are in positions where we are both having to play, whilst seeking to change, the game (see ‘My Double Life’ blog piece on The Bibliomagician). Better to have institutions that understand the issues despite having to work within them, than those with no understanding at all?